Series articles

[TOC]

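Setting up a Hadoop cluster with Docker

Prerequisites: a JDK environment and a ZooKeeper environment must already be installed; see the earlier articles in this series.

Preparation: three machines, with 10.8.46.35 and 10.8.46.197 as master nodes and 10.8.46.190 as the slave node. The three ZooKeeper nodes set up in the previous step must be running normally. Run the following commands on all three machines. Every server needs a JDK environment.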

1. Set the hostname of each machine, for example: hostnamectl set-hostname hadoop-01

10.8.46.35

hostnamectl set-hostname hadoop-01

[root@zookeeper-01-test opt]# hostname -f

hadoop-01

10.8.46.197

hostnamectl set-hostname hadoop-02

10.8.46.190

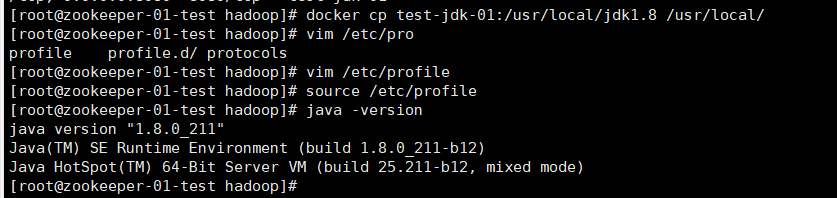

hostnamectl set-hostname hadoop-03

1. Configure the JDK environment (on all 3 servers)

docker cp test-jdk-01:/usr/local/jdk1.8 /usr/local/

vim /etc/profile

# Append these two lines at the end

export JAVA_HOME=/usr/local/jdk1.8

export PATH=$JAVA_HOME/bin:$PATH

Make the file take effect:

source /etc/profile
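Editing /etc/profile by hand on three servers makes it easy to append the same export twice when a step is rerun. As a rough sketch (the helper name `append_once` is made up for this example and is not part of the original setup), the two lines could be added idempotently like this:

```bash
#!/usr/bin/env bash
# append_once FILE LINE: append LINE to FILE only if an identical line
# is not already present (exact, whole-line, fixed-string match).
append_once() {
  file="$1"
  line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (needs root for /etc/profile):
# append_once /etc/profile 'export JAVA_HOME=/usr/local/jdk1.8'
# append_once /etc/profile 'export PATH=$JAVA_HOME/bin:$PATH'
```

Running the helper a second time with the same line leaves the file unchanged, so the step can be repeated safely.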

Copy the downloaded Hadoop archive (hadoop-3.2.2.tar.gz) to the /opt directory of the virtual host with a file-transfer tool such as Xftp.

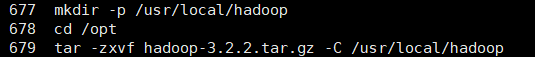

(1) Extract the archive

mkdir -p /usr/local/hadoop

cd /opt

tar -zxvf hadoop-3.2.2.tar.gz -C /usr/local/hadoop

(2) Edit the global variables

vim /etc/profile

Add the following global variables:

export HADOOP_HOME=/usr/local/hadoop/hadoop-3.2.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export YARN_LOG_DIR=$HADOOP_LOG_DIR

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

# Take effect immediately

source /etc/profile
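After sourcing the profile it is worth confirming that none of the variables ended up empty. In the listing above, YARN_LOG_DIR is derived from HADOOP_LOG_DIR, which the listing itself never sets, so it may well be blank unless it is defined elsewhere. A small sketch of such a check (the function name `missing_vars` is an assumption for illustration):

```bash
#!/usr/bin/env bash
# missing_vars NAME...: print the name of every listed variable that is
# unset or empty in the current shell.
missing_vars() {
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    [ -n "$value" ] || printf '%s\n' "$name"
  done
}

# Usage:
# missing_vars JAVA_HOME HADOOP_HOME YARN_HOME YARN_LOG_DIR
```

An empty result means every variable you listed has a value.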

Passwordless SSH setup:

ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.8.46.35

Passwordless transfer:

[root@hadoop-01 sbin]# scp ./start-yarn.sh 10.8.46.190:/usr/local/hadoop/hadoop-3.2.2/sbin

start-yarn.sh 100% 3427 4.7MB/s 00:00

[root@hadoop-01 sbin]# scp ./start-yarn.sh 10.8.46.197:/usr/local/hadoop/hadoop-3.2.2/sbin

start-yarn.sh

(3) Configure Hadoop

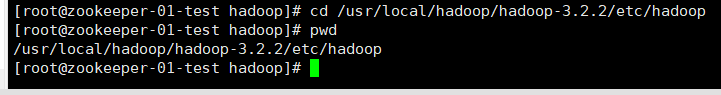

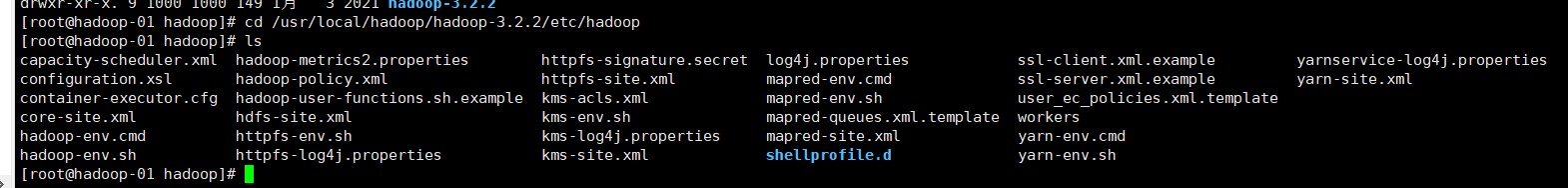

cd /usr/local/hadoop/hadoop-3.2.2/etc/hadoop

- Configure hadoop-env.sh

vim hadoop-env.sh

Change export JAVA_HOME=${JAVA_HOME} to the path of the installed JDK:

export JAVA_HOME=/usr/local/jdk1.8
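If you would rather not open the file in vim on every node, the same change can be scripted. The following is only a sketch under the assumption that hadoop-env.sh contains at most one `export JAVA_HOME=` line that should be rewritten (commented out or not); `set_java_home` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# set_java_home FILE JDK_DIR: rewrite the first "export JAVA_HOME=" line
# in FILE (even if it is commented out), or append one if none exists.
set_java_home() {
  file="$1"
  jdk="$2"
  tmp="$(mktemp)"
  awk -v jdk="$jdk" '
    /^[# \t]*export JAVA_HOME=/ && !replaced {
      print "export JAVA_HOME=" jdk
      replaced = 1
      next
    }
    { print }
    END { if (!replaced) print "export JAVA_HOME=" jdk }
  ' "$file" > "$tmp"
  # Write back through the original file so its permissions are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage:
# set_java_home /usr/local/hadoop/hadoop-3.2.2/etc/hadoop/hadoop-env.sh /usr/local/jdk1.8
```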

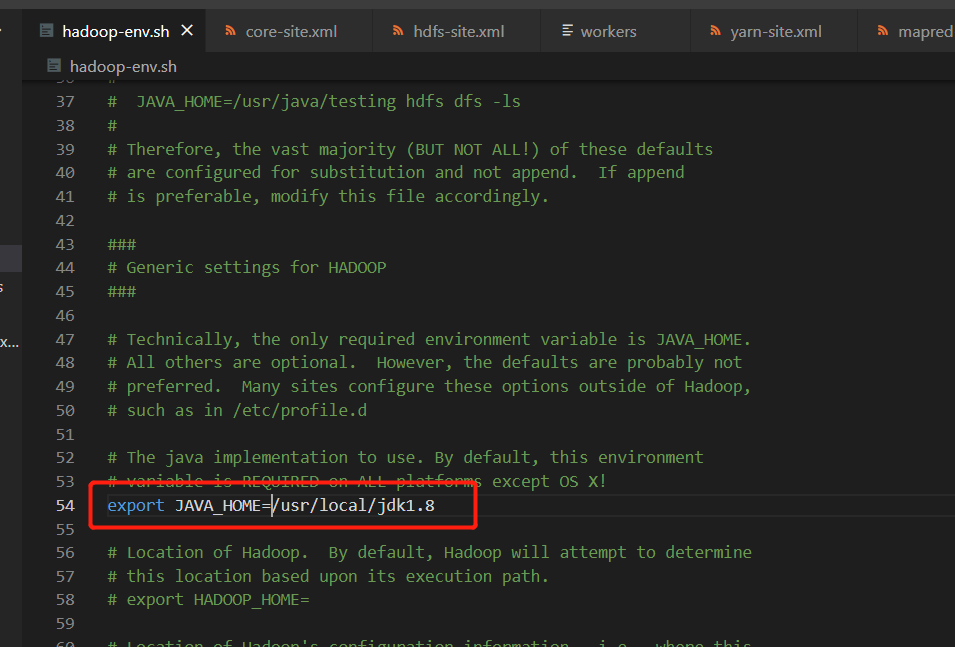

2. Configure core-site.xml

cd /usr/local/hadoop/hadoop-3.2.2/etc/hadoop

vim core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Logical name of the HDFS path -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop-local</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<!-- Where Hadoop stores temporary files -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<!-- Address of the ZooKeeper cluster in use -->

<property>

<name>ha.zookeeper.quorum</name>

<value>zookeeper-01-test:2181,zookeeper-02-test:2181,zookeeper-03-test:2181</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

<property>

<name>fs.trash.checkpoint.interval</name>

<value>1440</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.groups</name>

<value>*</value>

</property>

</configuration>

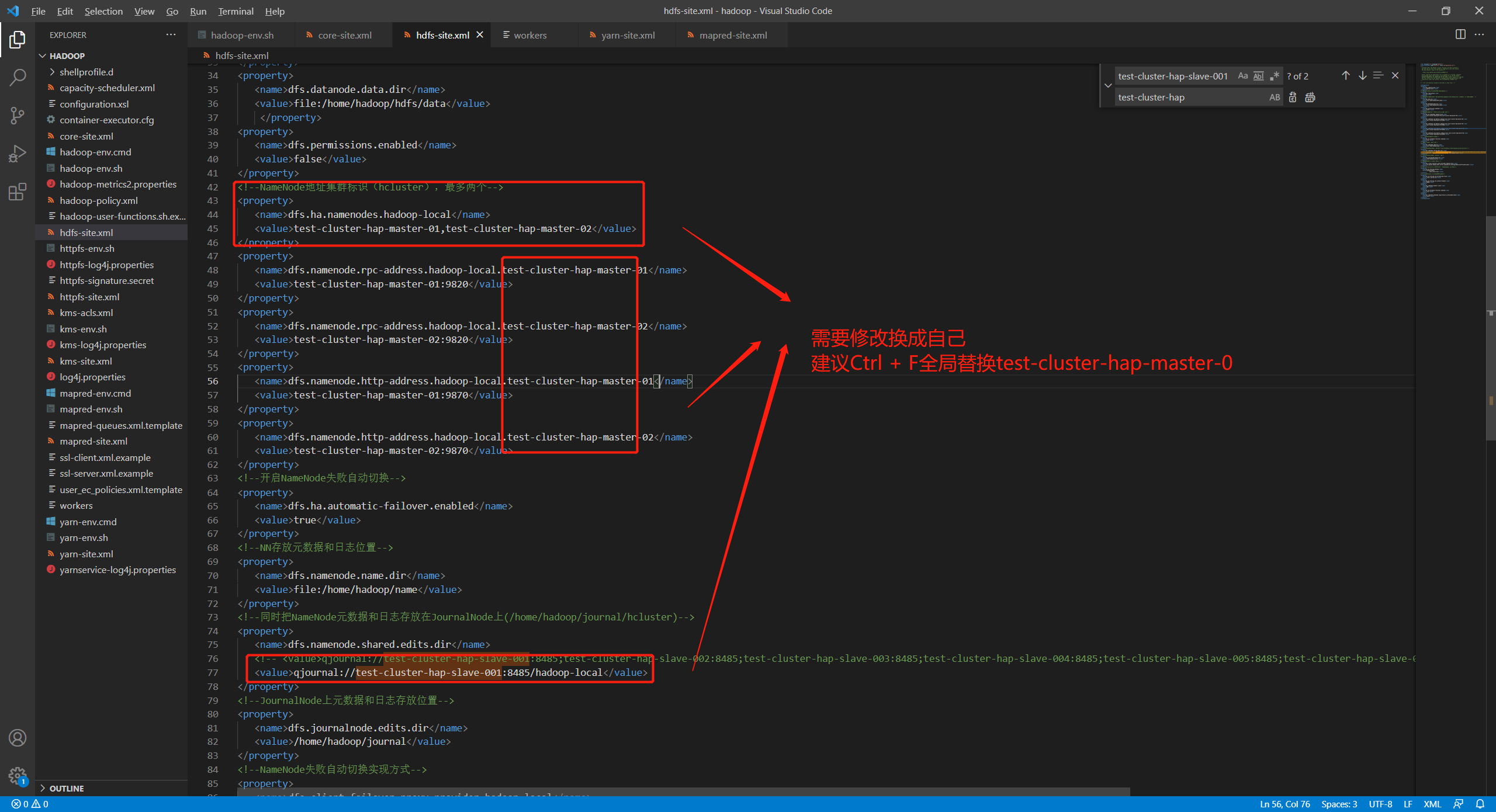
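With this many properties it helps to read a single value back out of the file after editing. On a node where Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` reports the effective value. For a quick look at the raw file, here is a rough sketch that assumes the layout used in this article: single-line `<name>` and `<value>` elements, and comments that start at the beginning of a line. The function name `get_property` is illustrative only.

```bash
#!/usr/bin/env bash
# get_property FILE NAME: print the first <value> found at or after the
# line containing <name>NAME</name>. Lines starting with "<!--" are skipped.
get_property() {
  awk -v want="<name>$2</name>" '
    /^[ \t]*<!--/ { next }
    index($0, want) { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}

# Usage:
# get_property /usr/local/hadoop/hadoop-3.2.2/etc/hadoop/core-site.xml fs.defaultFS
```

This is a text match, not an XML parser, so it will misread multi-line values or a file formatted differently.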

3. Configure hdfs-site.xml

vim hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.nameservices</name>

<value>hadoop-local</value>

</property>

<!-- Number of data replicas; set it according to the number of HDFS machines (default 3) -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

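<!-- Where HDFS stores block data; it can be spread over several disks to get past the single-disk bottleneck, with multiple locations separated by commas -->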

<property>

<name>dfs.data.dir</name>

<value>file:/home/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- NameNode IDs within the nameservice (hcluster); at most two -->

<property>

<name>dfs.ha.namenodes.hadoop-local</name>

<value>test-cluster-hap-master-01,test-cluster-hap-master-02</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hadoop-local.test-cluster-hap-master-01</name>

<value>test-cluster-hap-master-01:9820</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hadoop-local.test-cluster-hap-master-02</name>

<value>test-cluster-hap-master-02:9820</value>

</property>

<property>

<name>dfs.namenode.http-address.hadoop-local.test-cluster-hap-master-01</name>

<value>test-cluster-hap-master-01:9870</value>

</property>

<property>

<name>dfs.namenode.http-address.hadoop-local.test-cluster-hap-master-02</name>

<value>test-cluster-hap-master-02:9870</value>

</property>

<!-- Enable automatic NameNode failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Where the NameNode stores metadata and edit logs -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hadoop/name</value>

</property>

<!-- Also store the NameNode metadata and edit logs on the JournalNodes (/home/hadoop/journal/hcluster) -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<!-- <value>qjournal://test-cluster-hap-slave-001:8485;test-cluster-hap-slave-002:8485;test-cluster-hap-slave-003:8485;test-cluster-hap-slave-004:8485;test-cluster-hap-slave-005:8485;test-cluster-hap-slave-006:8485;test-cluster-hap-slave-007:8485/hadoop-local</value> -->

<value>qjournal://test-cluster-hap-slave-001:8485/hadoop-local</value>

</property>

<!-- Where the JournalNode stores metadata and edit logs -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/journal</value>

</property>

<!-- Implementation used for automatic NameNode failover -->

<property>

<name>dfs.client.failover.proxy.provider.hadoop-local</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing methods, which ensure that only one NameNode is active at any time -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence(hdfs)

shell(/bin/true)</value>

</property>

<!-- The sshfence method requires passwordless SSH authentication -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

</property>


<property>

<name>dfs.namenode.datanode.registration.ip-hostname-check</name>

<value>false</value>

</property>

</configuration>

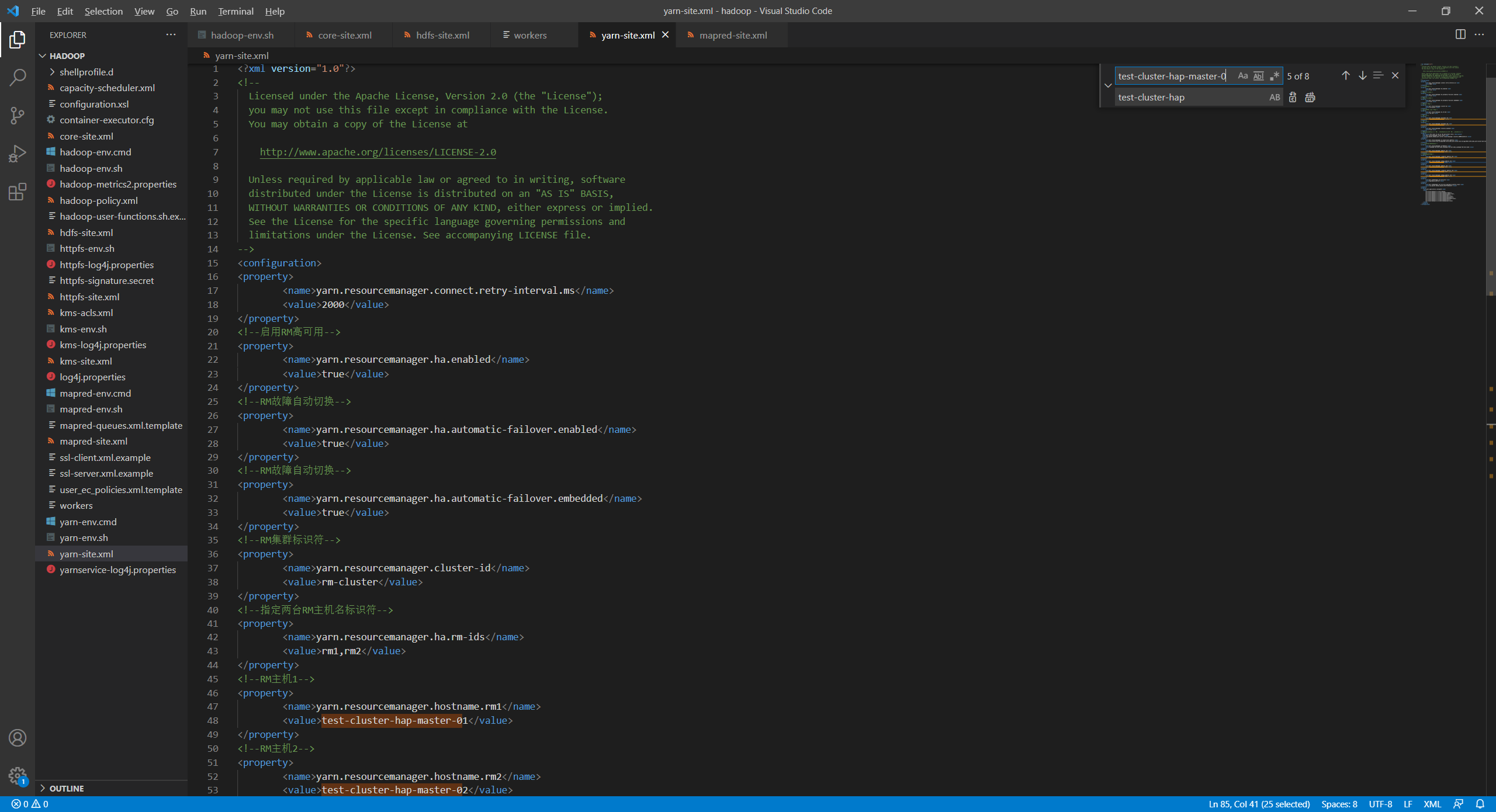

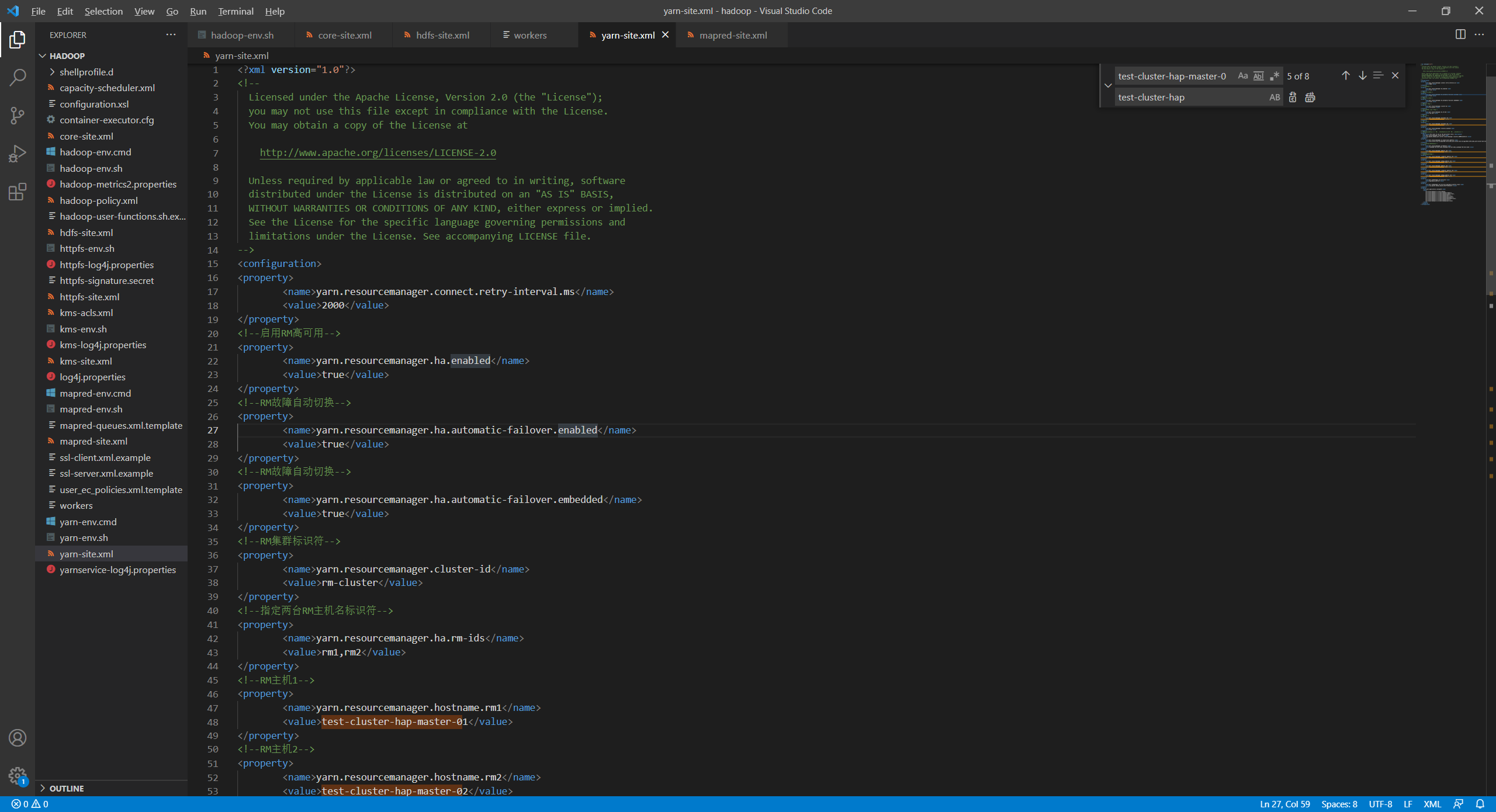

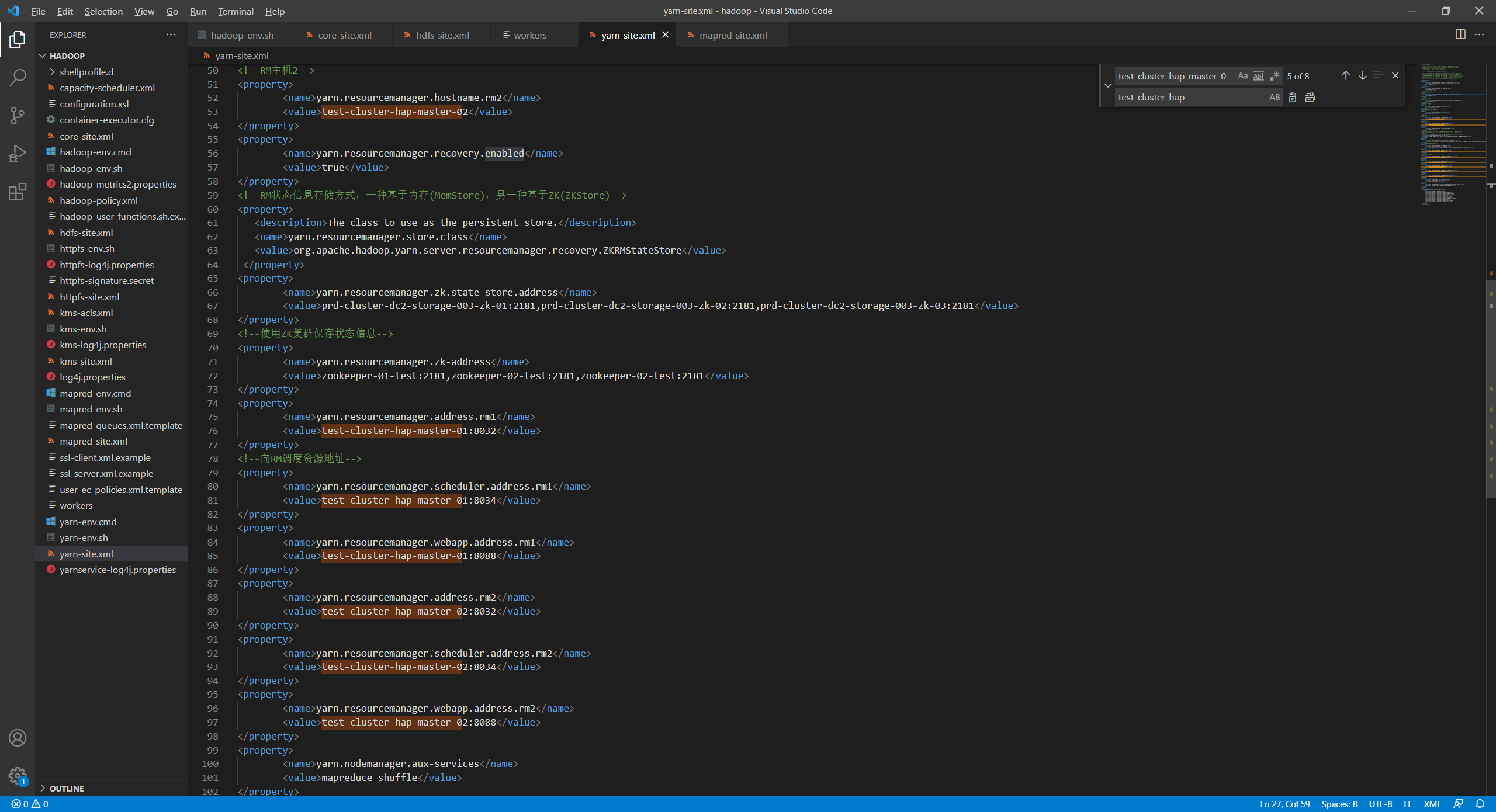
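The value of dfs.namenode.shared.edits.dir is a semicolon-separated list of JournalNode host:port pairs followed by the nameservice name. This setup runs a single JournalNode; the Hadoop HA documentation recommends an odd number of them, at least three, so that the loss of one can be tolerated. A small sketch for building the URI from a host list (the helper `qjournal_uri` is hypothetical, and port 8485 is taken from the configuration above):

```bash
#!/usr/bin/env bash
# qjournal_uri NAMESERVICE HOST...: join the hosts as host:8485;host:8485
# and append /NAMESERVICE, matching the qjournal:// URI format.
qjournal_uri() {
  ns="$1"
  shift
  port=8485
  joined=""
  for host in "$@"; do
    joined="${joined:+$joined;}${host}:${port}"
  done
  printf 'qjournal://%s/%s\n' "$joined" "$ns"
}

# Usage with the single JournalNode from this article:
# qjournal_uri hadoop-local test-cluster-hap-slave-001
```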

4. Configure yarn-site.xml

vim yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<!-- Enable RM high availability -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Automatic RM failover -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Automatic RM failover (embedded elector) -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<!-- RM cluster identifier -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>rm-cluster</value>

</property>

<!-- Logical IDs of the two RMs -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- RM host 1 -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>test-cluster-hap-master-01</value>

</property>

<!-- RM host 2 -->

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>test-cluster-hap-master-02</value>

</property>

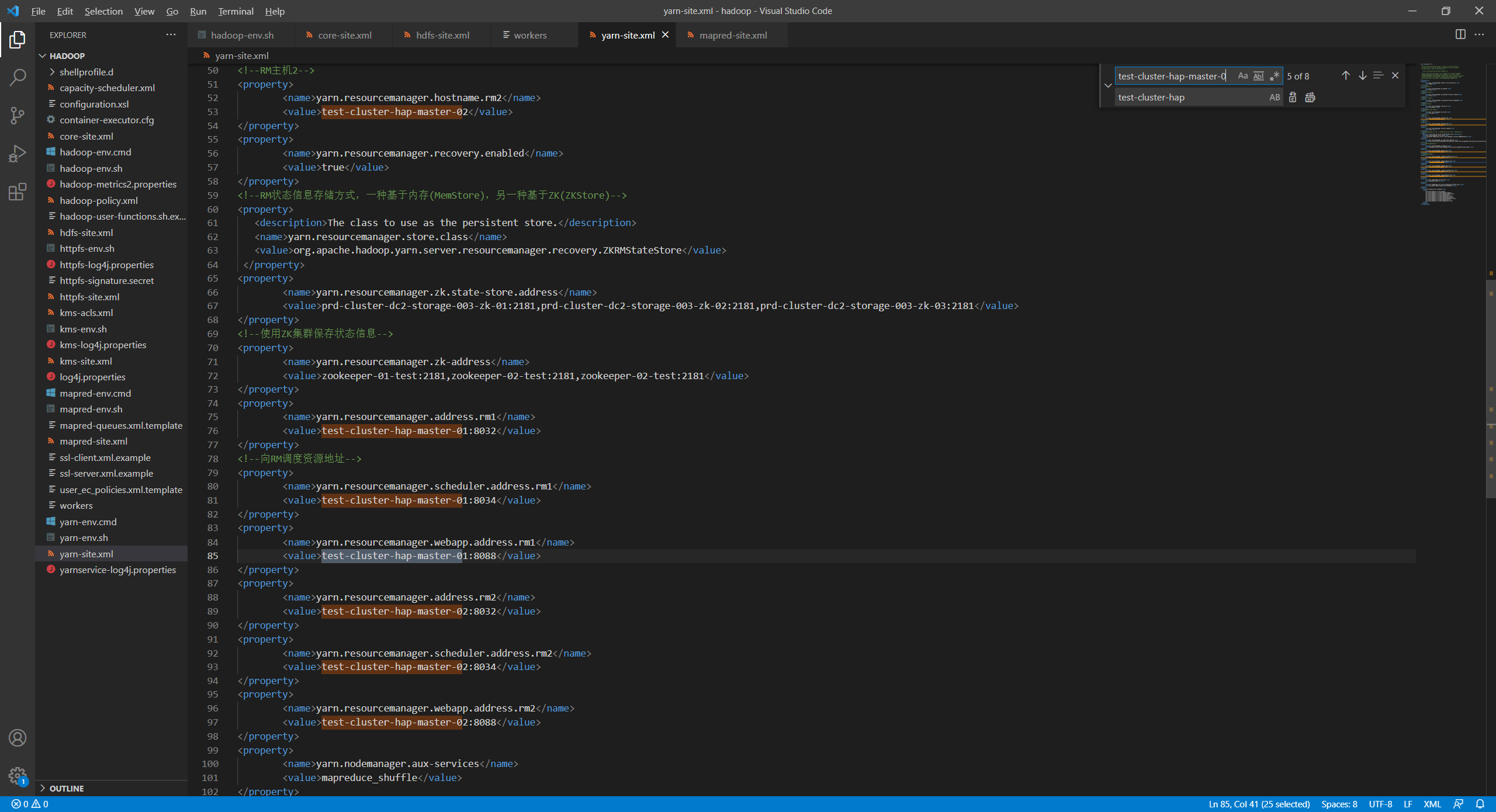

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- How RM state is stored: either in memory (MemStore) or in ZooKeeper (ZKStore) -->

<property>

<description>The class to use as the persistent store.</description>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk.state-store.address</name>

<value>prd-cluster-dc2-storage-003-zk-01:2181,prd-cluster-dc2-storage-003-zk-02:2181,prd-cluster-dc2-storage-003-zk-03:2181</value>

</property>

<!-- Use the ZooKeeper cluster to store state information -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>zookeeper-01-test:2181,zookeeper-02-test:2181,zookeeper-03-test:2181</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>test-cluster-hap-master-01:8032</value>

</property>

<!-- Address used to request resources from the RM scheduler -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>test-cluster-hap-master-01:8034</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>test-cluster-hap-master-01:8088</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>test-cluster-hap-master-02:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>test-cluster-hap-master-02:8034</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>test-cluster-hap-master-02:8088</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>

/usr/local/hadoop/hadoop-3.2.2/etc/hadoop,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/common/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/common/lib/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/hdfs/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/hdfs/lib/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/mapreduce/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/mapreduce/lib/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/yarn/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/yarn/lib/*

</value>

</property>

</configuration>
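A ZooKeeper address list is easy to mistype when it is copied between files, and an entry that appears twice means one server of the ensemble is silently missing from the list. As a quick sanity check, here is a minimal sketch (the function name `duplicate_hosts` is made up for this example):

```bash
#!/usr/bin/env bash
# duplicate_hosts "h1:2181,h2:2181,...": print every host:port entry
# that occurs more than once in the comma-separated list.
duplicate_hosts() {
  printf '%s\n' "$1" | tr ',' '\n' | sort | uniq -d
}

# Usage: paste the value of yarn.resourcemanager.zk-address or
# ha.zookeeper.quorum as the argument; no output means no duplicates.
# duplicate_hosts 'zookeeper-01-test:2181,zookeeper-02-test:2181,zookeeper-03-test:2181'
```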

5. Configure mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<!-- Enable RM high availability -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Automatic RM failover -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Automatic RM failover (embedded elector) -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<!-- RM cluster identifier -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>rm-cluster</value>

</property>

<!-- Logical IDs of the two RMs -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- RM host 1 -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>test-cluster-hap-master-01</value>

</property>

<!-- RM host 2 -->

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>test-cluster-hap-master-02</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- How RM state is stored: either in memory (MemStore) or in ZooKeeper (ZKStore) -->

<property>

<description>The class to use as the persistent store.</description>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk.state-store.address</name>

<value>prd-cluster-dc2-storage-003-zk-01:2181,prd-cluster-dc2-storage-003-zk-02:2181,prd-cluster-dc2-storage-003-zk-03:2181</value>

</property>

<!-- Use the ZooKeeper cluster to store state information -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>zookeeper-01-test:2181,zookeeper-02-test:2181,zookeeper-03-test:2181</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>test-cluster-hap-master-01:8032</value>

</property>

<!-- Address used to request resources from the RM scheduler -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>test-cluster-hap-master-01:8034</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>test-cluster-hap-master-01:8088</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>test-cluster-hap-master-02:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>test-cluster-hap-master-02:8034</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>test-cluster-hap-master-02:8088</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>

/usr/local/hadoop/hadoop-3.2.2/etc/hadoop,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/common/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/common/lib/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/hdfs/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/hdfs/lib/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/mapreduce/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/mapreduce/lib/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/yarn/*,

/usr/local/hadoop/hadoop-3.2.2/share/hadoop/yarn/lib/*

</value>

</property>

</configuration>
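The listing in this step carries the same yarn.* properties as yarn-site.xml. MapReduce's own settings normally live in mapred-site.xml, and the one a YARN cluster usually needs is mapreduce.framework.name. The following is a minimal sketch of such a file and not the author's actual configuration; the helper name `write_mapred_site` and the target directory are assumptions for illustration:

```bash
#!/usr/bin/env bash
# write_mapred_site DIR: write a minimal mapred-site.xml into DIR.
write_mapred_site() {
  cat > "$1/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Run MapReduce jobs on YARN instead of the local job runner -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}

# Usage (back up any existing file first):
# write_mapred_site /usr/local/hadoop/hadoop-3.2.2/etc/hadoop
```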

6. Edit workers

test-cluster-hap-slave-001

7. Edit the start scripts

Add the same content to both start-dfs.sh and stop-dfs.sh, on all three servers!

vim /usr/local/hadoop/hadoop-3.2.2/sbin/start-dfs.sh

vim /usr/local/hadoop/hadoop-3.2.2/sbin/stop-dfs.sh

Add:

HDFS_DATANODE_USER=root

HDFS_NAMENODE_USER=root

HDFS_ZKFC_USER=root

HDFS_JOURNALNODE_USER=root

Add to start-yarn.sh:

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

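Another approach is passwordless transfer, which requires passwordless SSH to be set up beforehand.

Configure the scripts on one server, then send them to the other servers.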

vim start-dfs.sh

scp ./start-dfs.sh 10.8.46.197:/usr/local/hadoop/hadoop-3.2.2/sbin

scp ./start-dfs.sh 10.8.46.190:/usr/local/hadoop/hadoop-3.2.2/sbin
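The per-host scp commands in this step follow one pattern, so they can be folded into a loop. A sketch under the assumption that passwordless SSH already works for every target; `push_file` and the `DRY_RUN` switch are names invented for this example:

```bash
#!/usr/bin/env bash
# push_file FILE DEST_DIR HOST...: copy FILE into DEST_DIR on each host.
# With DRY_RUN=1 the scp commands are printed instead of executed.
push_file() {
  file="$1"
  dest="$2"
  shift 2
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      printf 'scp %s %s:%s\n' "$file" "$host" "$dest"
    else
      scp "$file" "$host:$dest"
    fi
  done
}

# Usage:
# DRY_RUN=1 push_file ./start-dfs.sh /usr/local/hadoop/hadoop-3.2.2/sbin 10.8.46.197 10.8.46.190
```

Printing the commands first makes it easy to check hosts and paths before anything is overwritten on the other nodes.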

vim start-yarn.sh

scp ./start-yarn.sh 10.8.46.190:/usr/local/hadoop/hadoop-3.2.2/sbin

scp ./start-yarn.sh 10.8.46.197:/usr/local/hadoop/hadoop-3.2.2/sbin

8. Create directories

mkdir -p /home/hadoop/hdfs/data

mkdir -p /home/hadoop/name

mkdir -p /home/hadoop/journal

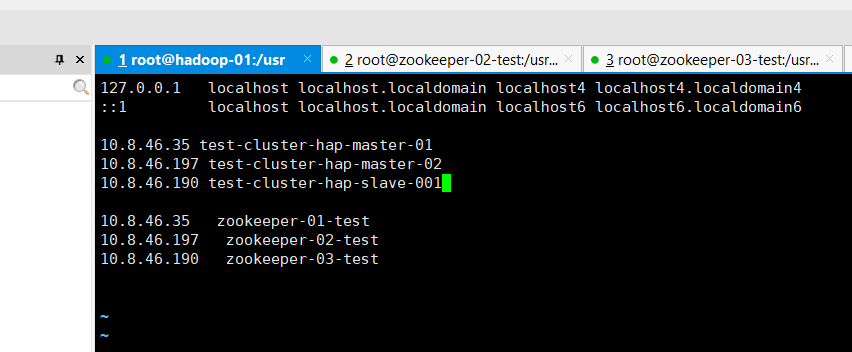

mkdir -p /home/hadoop/tmp

9. Add hosts entries (on every server)

vim /etc/hosts

10.8.46.35 test-cluster-hap-master-01

10.8.46.197 test-cluster-hap-master-02

10.8.46.190 test-cluster-hap-slave-001
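Every hostname used in the XML files has to resolve on every node, so it is worth checking the hosts file before starting any daemon. On a live machine `getent hosts NAME` answers this directly. The sketch below instead reads a hosts-format file, which also works on a copy before it is deployed; the function name `host_ip` is an assumption for illustration:

```bash
#!/usr/bin/env bash
# host_ip HOSTS_FILE NAME: print the IP mapped to NAME in a hosts-format
# file; exit with a non-zero status if NAME is not listed.
host_ip() {
  awk -v name="$2" '
    /^[ \t]*#/ { next }
    {
      for (i = 2; i <= NF; i++) {
        if ($i == name) { print $1; found = 1; exit }
      }
    }
    END { exit found ? 0 : 1 }
  ' "$1"
}

# Usage:
# host_ip /etc/hosts test-cluster-hap-master-01 || echo "missing entry"
```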

10. Start Hadoop

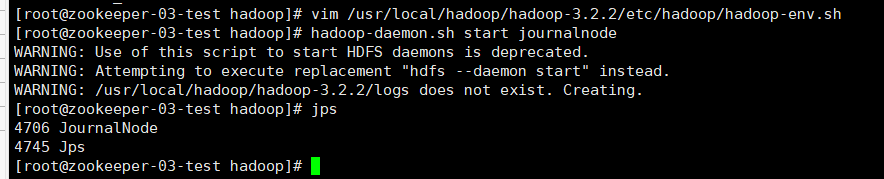

1. Run the following command on every slave node (slave-001-Hadoop-test, slave-002-Hadoop-test, slave-003-Hadoop-test)

In my setup that is only the 10.8.46.190 server (the slave node).

hadoop-daemon.sh start journalnode

2. Format the NameNode on test-cluster-hap-master-01 (before formatting, make sure ZooKeeper is running and the hosts entries are in place)

hadoop namenode -format

3. Start the NameNode on test-cluster-hap-master-01 (active)

hadoop-daemon.sh start namenode

4. On test-cluster-hap-master-02, sync the metadata from test-cluster-hap-master-01

hdfs namenode -bootstrapStandby # in practice this copies the current folder over from test-cluster-hap-master-01

5. Start the NameNode on test-cluster-hap-master-02 (standby)

hadoop-daemon.sh start namenode

6. Format ZKFC on test-cluster-hap-master-01

hdfs zkfc -formatZK

7. Start the HDFS cluster from test-cluster-hap-master-01

start-dfs.sh

8. Start the ResourceManager (on test-cluster-hap-master-01)

start-yarn.sh

After a successful start:

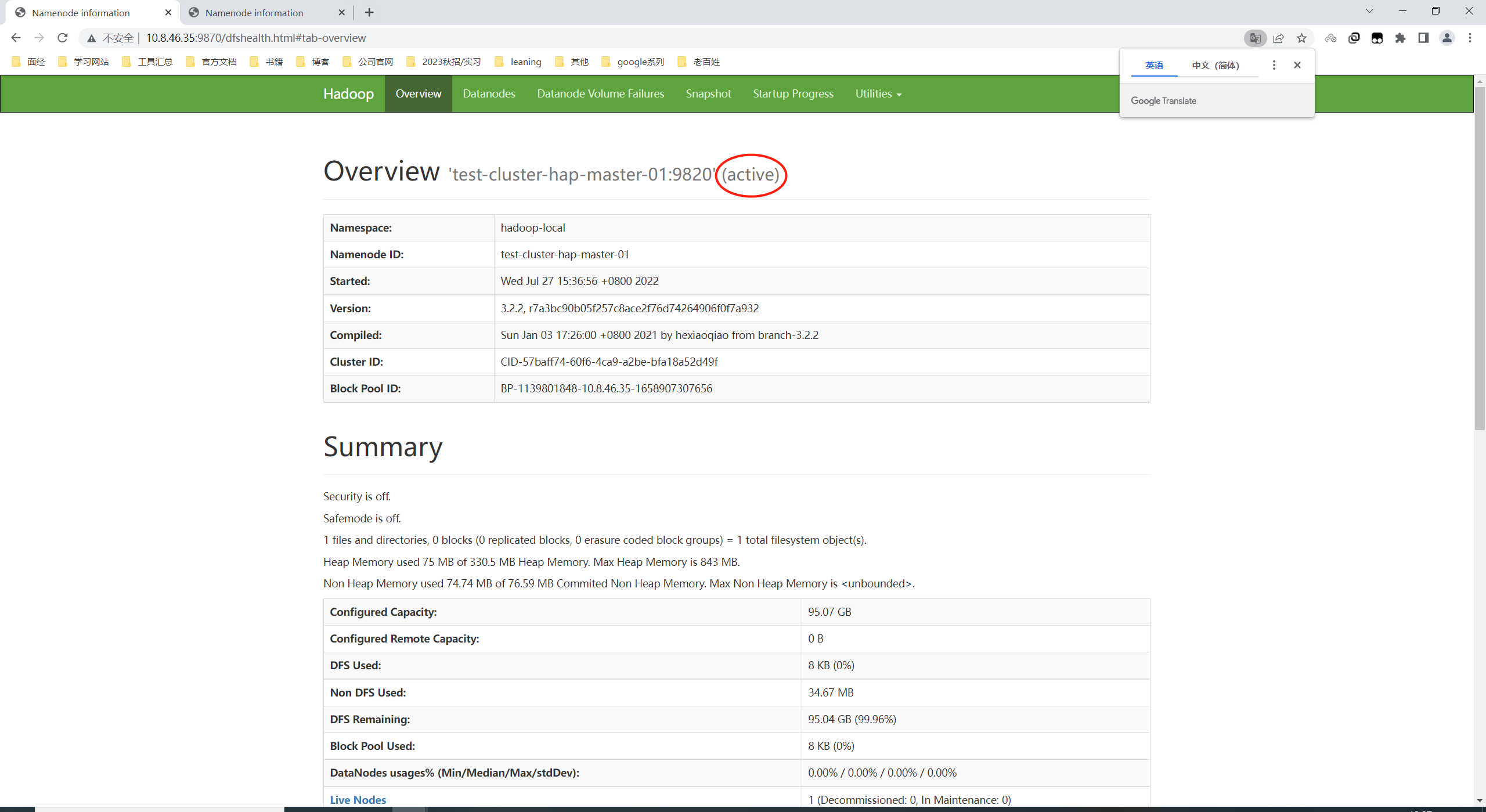

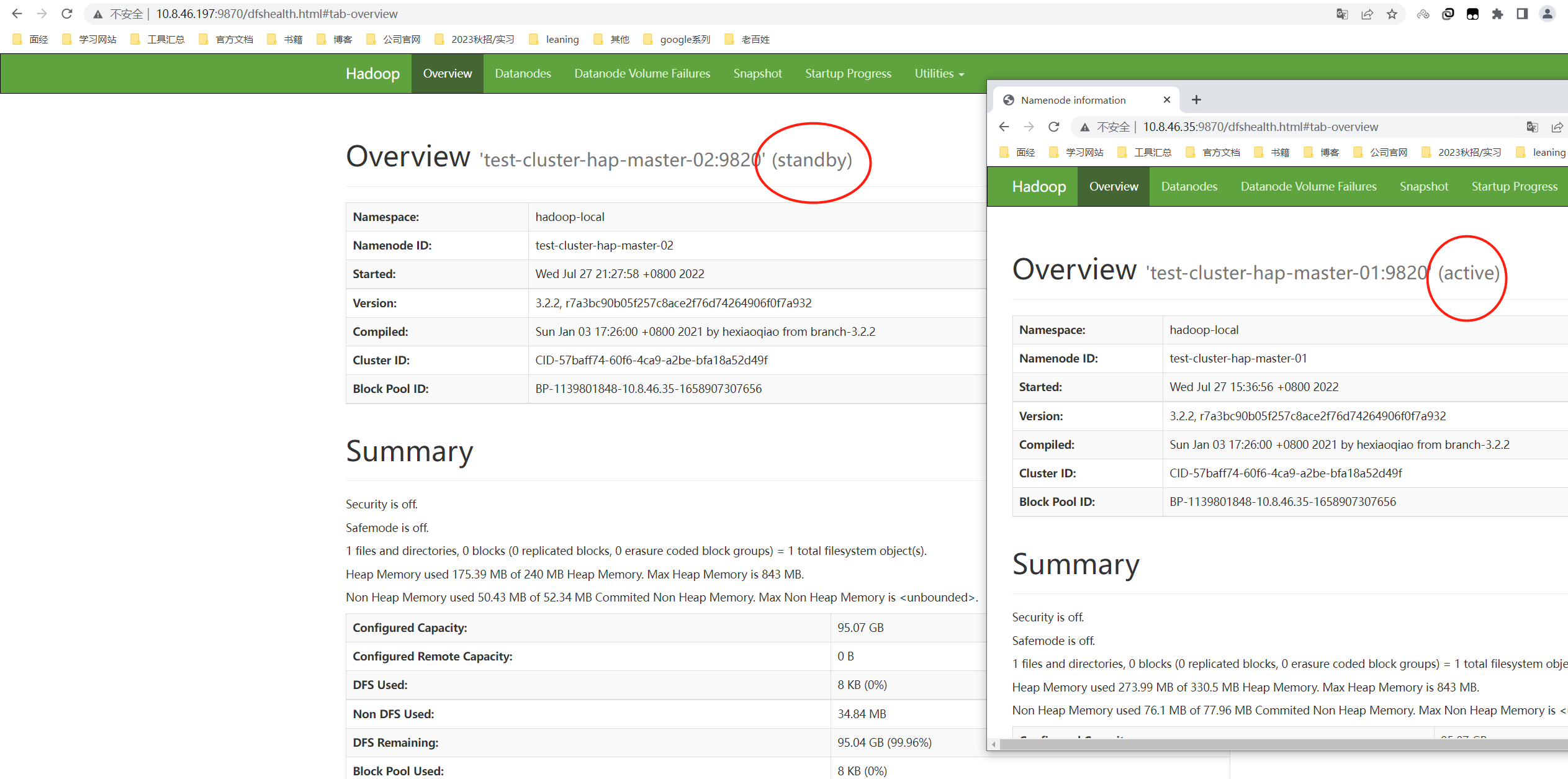
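The order of the first-time startup matters, and each command belongs to a specific node. The sketch below just prints that sequence as a checklist, using the `hdfs --daemon start` form that Hadoop 3 suggests in place of the deprecated hadoop-daemon.sh, and `hdfs namenode -format` in place of `hadoop namenode -format`. The function name `ha_bootstrap_plan` is invented for this example, and nothing is executed on any host:

```bash
#!/usr/bin/env bash
# ha_bootstrap_plan NN1 NN2 JN: print the first-time HA startup sequence,
# one "[host] command" line per step, in the order it should be run.
ha_bootstrap_plan() {
  nn1="$1"
  nn2="$2"
  jn="$3"
  cat <<EOF
[$jn] hdfs --daemon start journalnode
[$nn1] hdfs namenode -format
[$nn1] hdfs --daemon start namenode
[$nn2] hdfs namenode -bootstrapStandby
[$nn2] hdfs --daemon start namenode
[$nn1] hdfs zkfc -formatZK
[$nn1] start-dfs.sh
[$nn1] start-yarn.sh
EOF
}

# Usage:
# ha_bootstrap_plan test-cluster-hap-master-01 test-cluster-hap-master-02 test-cluster-hap-slave-001
```

Formatting the NameNode and ZKFC is a one-time operation; rerunning those steps on a cluster that already holds data would wipe its metadata.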

Visit the test-cluster-hap-master-01 node at http://10.8.46.35:9870/

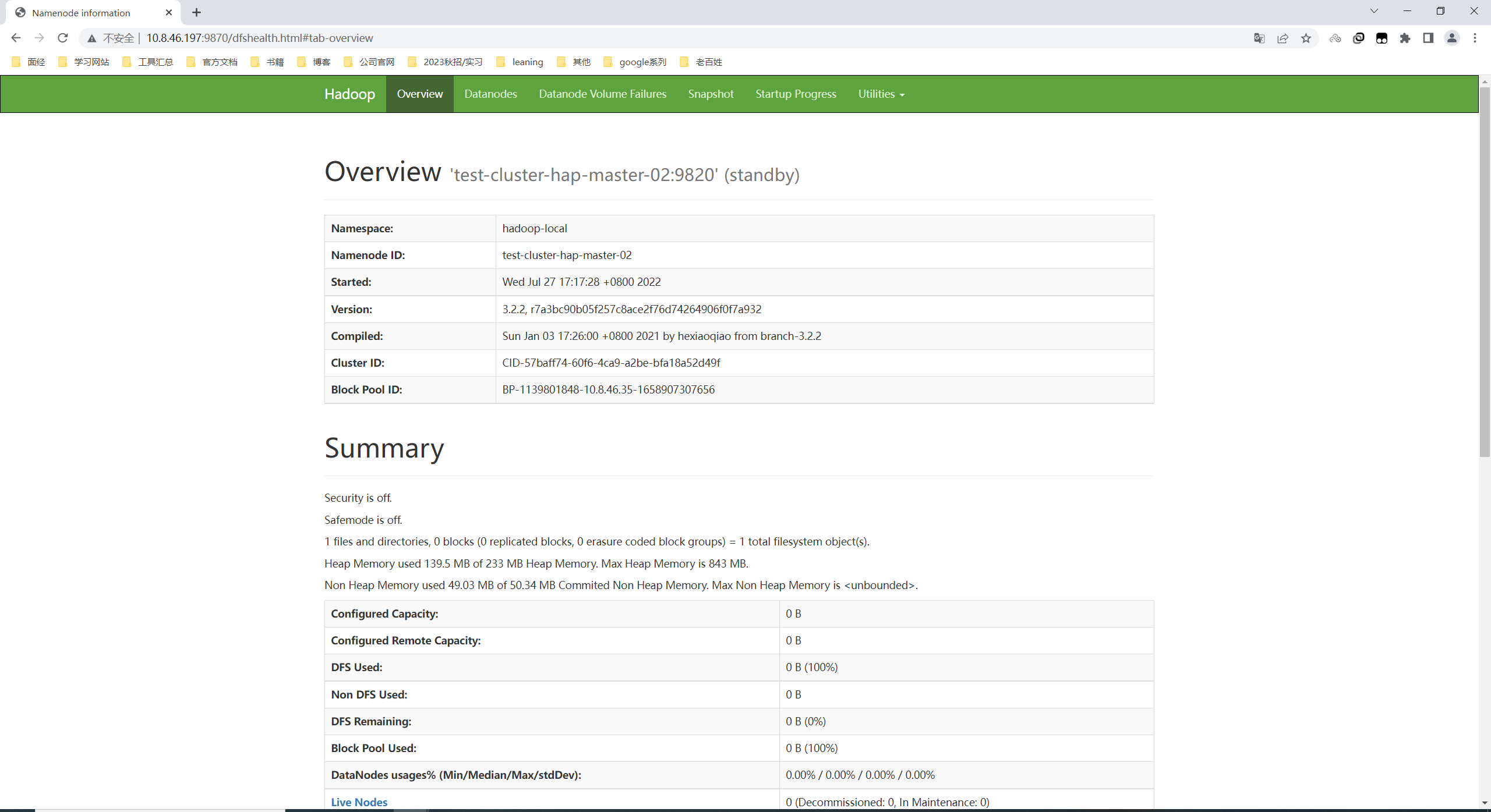

Visit the test-cluster-hap-master-02 node at http://10.8.46.197:9870/

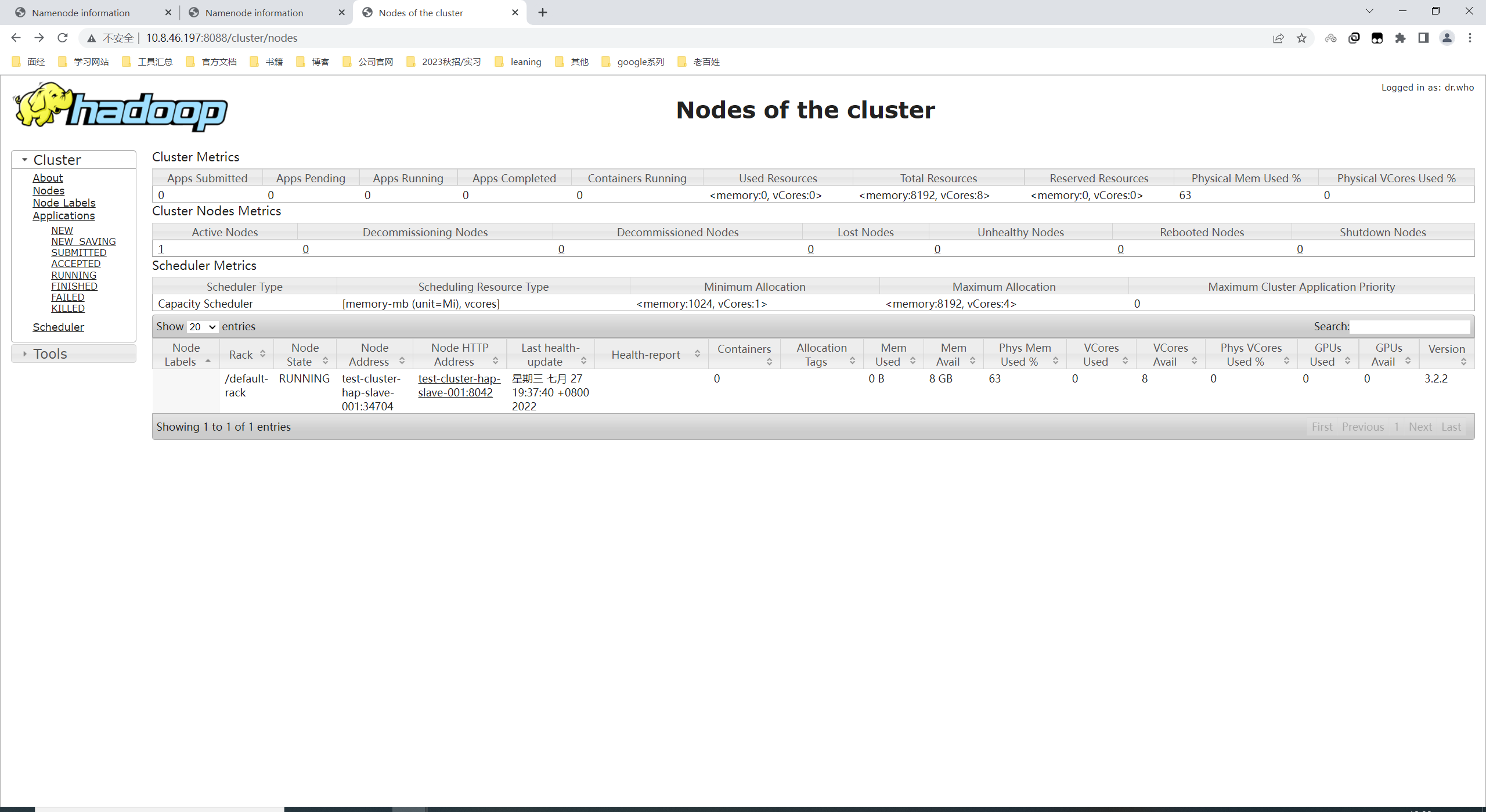

View the 10.8.46.190 test-cluster-hap-slave-001 node at http://10.8.46.197:8088/cluster/nodes

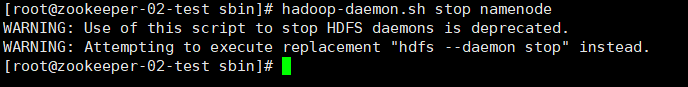

11. Verify the master nodes

Stop the NameNode on test-cluster-hap-master-02 (standby):

hadoop-daemon.sh stop namenode

| Before | After refresh |
|---|---|
| Start the NameNode again with hadoop-daemon.sh start namenode, then visit the page | |
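Besides refreshing the web UI, the state of each NameNode can be queried from the command line with `hdfs haadmin -getServiceState`. A small sketch that loops over the NameNode IDs from hdfs-site.xml; the wrapper `nn_states` and the `HDFS_CMD` override are assumptions added for illustration, and a NameNode that cannot be queried is reported as "unreachable":

```bash
#!/usr/bin/env bash
# nn_states ID...: print "ID STATE" for each NameNode ID, where STATE is
# whatever `hdfs haadmin -getServiceState ID` reports (active/standby).
# HDFS_CMD may name a different hdfs executable (default: hdfs on PATH).
nn_states() {
  for id in "$@"; do
    state="$("${HDFS_CMD:-hdfs}" haadmin -getServiceState "$id" 2>/dev/null)" || state="unreachable"
    printf '%s %s\n' "$id" "$state"
  done
}

# Usage:
# nn_states test-cluster-hap-master-01 test-cluster-hap-master-02
```

Running it before and after stopping one NameNode gives the same before/after comparison as the screenshots, in text form.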

done~

[root@zookeeper-03-test hadoop]# vim /usr/local/hadoop/hadoop-3.2.2/etc/hadoop/hadoop-env.sh

[root@zookeeper-03-test hadoop]# hadoop-daemon.sh start journalnode

WARNING: Use of this script to start HDFS daemons is deprecated.

WARNING: Attempting to execute replacement "hdfs --daemon start" instead.

WARNING: /usr/local/hadoop/hadoop-3.2.2/logs does not exist. Creating.

[root@zookeeper-03-test hadoop]# jps

4706 JournalNode

4745 Jps

[root@zookeeper-03-test hadoop]#
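The `jps` output above is the quickest way to see which daemons are up on a node. To compare it against what a node is supposed to run, something like the following sketch can help; `missing_daemons` is a hypothetical helper, and the expected list is whatever you pass in:

```bash
#!/usr/bin/env bash
# missing_daemons EXPECTED...: read `jps` output on stdin and print each
# expected daemon name that does not appear in it.
missing_daemons() {
  # Second column of jps output is the main class name.
  running="$(awk '{ print $2 }')"
  for name in "$@"; do
    printf '%s\n' "$running" | grep -qx -- "$name" || printf '%s\n' "$name"
  done
}

# Usage on the slave node:
# jps | missing_daemons JournalNode DataNode NodeManager
```

An empty result means every expected daemon was found in the `jps` listing.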

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vim /usr/local/hadoop/hadoop-3.2.2/sbin/start-yarn.sh

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

vim /usr/local/hadoop/hadoop-3.2.2/sbin/stop-yarn.sh

vim /usr/local/hadoop/hadoop-3.2.2/etc/hadoop/hadoop-env.sh

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export HDFS_ZKFC_USER=root